Gromacs/Acceleration and parallelization

From Computational Biophysics and Materials Science Group

| Line 23: | Line 23: | ||

Points to note: | Points to note: | ||

* For single machine only, i.e. N<=16 | * For single machine only, i.e. N<=16 | ||

| + | * -pin on is used when N<16 | ||

==Gromacs 5.0.4 thread-MPI, double== | ==Gromacs 5.0.4 thread-MPI, double== | ||

| Line 29: | Line 30: | ||

Points to note: | Points to note: | ||

* For single machine only, i.e. N<=16 | * For single machine only, i.e. N<=16 | ||

| + | * -pin on is used when N<16 | ||

==Benchmark== | ==Benchmark== | ||

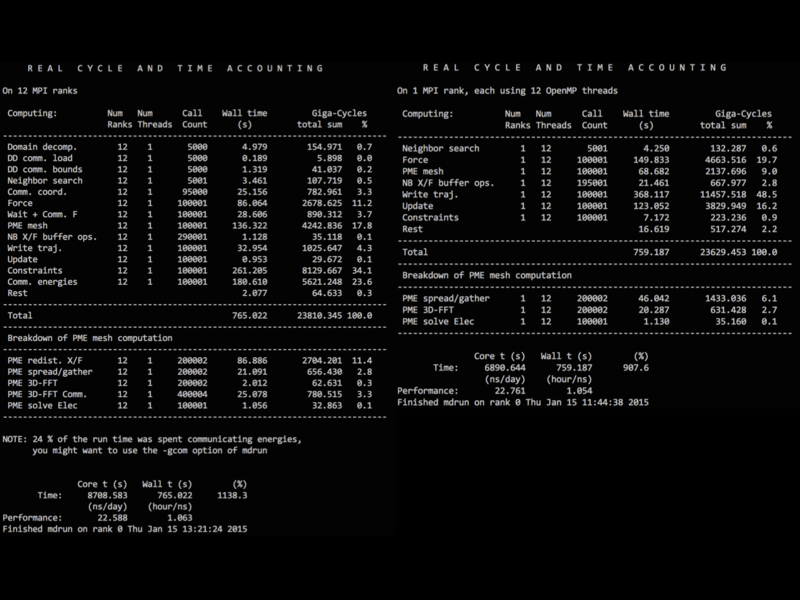

We compared difference between 12 MPI processes with 1 OPENMP thread each and 1 MPI process with 12 OPENMP threads. Though same amount of time is used, different distribution of time could be found. | We compared difference between 12 MPI processes with 1 OPENMP thread each and 1 MPI process with 12 OPENMP threads. Though same amount of time is used, different distribution of time could be found. | ||

[[File:Compare_mpi.png|800px]] | [[File:Compare_mpi.png|800px]] | ||

Latest revision as of 13:29, 16 January 2015

Read this first.

On Combo, we have compiled several builds of Gromacs. For the list of builds, refer to How to use Combo; for the compilation options, refer to How to compile Gromacs.

We now provide mdrun options for each build:

Gromacs 5.0.4 MPI, single, GPU

Under testing.

Gromacs 5.0.4 MPI, double

#PBS -l nodes=1:ppn=12

mpirun mdrun -ntomp N -pin on

Points to note:

* N is the number of cores you wish to use when a single machine is utilized.
* For reasons we do not yet understand, a total core count M given after mpirun is not distributed as expected: M=32 utilizes only a single node, and M=48 utilizes one and a half nodes (as observed in Ganglia).
* Therefore, DO NOT specify the total number of cores after mpirun. Specify the resources with #PBS -l instead and let mpirun and mdrun decide for themselves.
* -pin on is used when N<16.
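The notes above can be put together as a small job-script generator. This is only a sketch under assumptions: `make_job` and its arguments are hypothetical names, `cd "$PBS_O_WORKDIR"` is a common PBS idiom that is not part of the original instructions, and the example call mirrors the benchmark's 12 processes with 1 OpenMP thread each, assuming mpirun starts one process per requested core.

```shell
#!/bin/sh
# Sketch: print a PBS job script for the MPI double-precision build.
# make_job PPN N  (hypothetical helper; PPN = cores requested, N = value for -ntomp)
make_job() {
    ppn=$1
    n=$2
    # Rule from the notes: -pin on is used only when N<16.
    pin=""
    if [ "$n" -lt 16 ]; then
        pin=" -pin on"
    fi
    cat <<EOF
#!/bin/sh
#PBS -l nodes=1:ppn=${ppn}
cd "\$PBS_O_WORKDIR"
# No core count after mpirun: resources come from the #PBS -l line above.
mpirun mdrun -ntomp ${n}${pin}
EOF
}

# Example: request 12 cores on one node, 1 OpenMP thread per process.
make_job 12 1
```

The printed script could be redirected to a file and submitted with qsub; the values 12 and 1 are placeholders, not a tuned recommendation.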

Gromacs 5.0.4 CUDA, single

mdrun (-nt N) (-pin on)

Points to note:

* For single machine only, i.e. N<=16
* -pin on is used when N<16

Gromacs 5.0.4 thread-MPI, double

mdrun (-nt N) (-pin on)

Points to note:

* For single machine only, i.e. N<=16
* -pin on is used when N<16
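The rule shared by the CUDA and thread-MPI builds (N<=16, with -pin on only when N<16) can be sketched as a small shell helper. `mdrun_cmd` and `MAX_CORES` are hypothetical names introduced for illustration; the function only prints the command line and does not run mdrun.

```shell
#!/bin/sh
# Sketch: build the mdrun command line for a single-machine build
# (CUDA single or thread-MPI double). mdrun_cmd is a hypothetical helper.
MAX_CORES=16   # cores on one machine, taken from "N<=16" in the notes

mdrun_cmd() {
    n=$1
    if [ "$n" -gt "$MAX_CORES" ]; then
        echo "error: N=$n exceeds the $MAX_CORES cores of a single machine" >&2
        return 1
    fi
    cmd="mdrun -nt $n"
    # Pin threads only when the machine is not fully used (N<16).
    if [ "$n" -lt "$MAX_CORES" ]; then
        cmd="$cmd -pin on"
    fi
    echo "$cmd"
}

mdrun_cmd 8     # prints: mdrun -nt 8 -pin on
mdrun_cmd 16    # prints: mdrun -nt 16
```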

Benchmark

We compared 12 MPI processes with 1 OpenMP thread each against 1 MPI process with 12 OpenMP threads. Although both runs took the same amount of time, the distribution of that time differed.

[[File:Compare_mpi.png|800px]]
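The page does not record which build or command lines were used for this comparison. As a rough sketch, assuming a thread-MPI build where the rank and thread counts are set with -ntmpi and -ntomp, the two layouts could be written as follows. `layout_cmd` and `TOTAL` are hypothetical names, and the script only prints the commands.

```shell
#!/bin/sh
# Sketch: print the two rank/thread layouts compared in the benchmark.
# Assumes a thread-MPI build of mdrun; layout_cmd is a hypothetical helper.
layout_cmd() {
    ranks=$1
    threads=$2
    echo "mdrun -ntmpi $ranks -ntomp $threads"
}

TOTAL=12
layout_cmd "$TOTAL" 1      # 12 ranks, 1 OpenMP thread each
layout_cmd 1 "$TOTAL"      # 1 rank, 12 OpenMP threads
```

Running each layout on the same input and comparing the timing breakdown at the end of the two md.log files is one way to see how the time is distributed.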